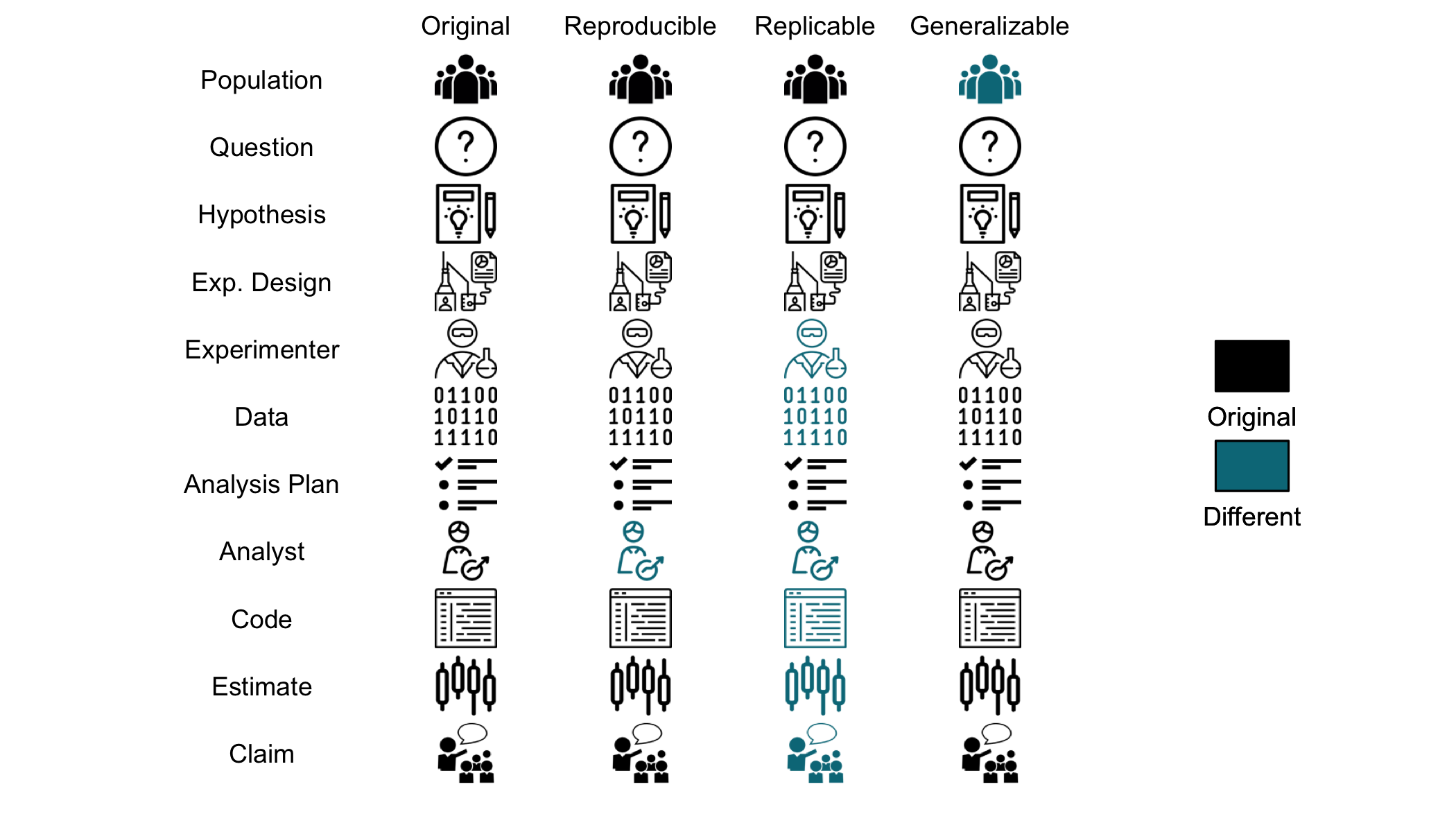

class: left, middle, inverse, title-slide

.title[
# Motivation
]
.author[
### David Benkeser, PhD MPH<br> <span style="font-size: 50%;"> Emory University<br> Department of Biostatistics and Bioinformatics </span>
]
.date[
### INFO550<br><br><svg viewBox="0 0 512 512" style="height:1em;position:relative;display:inline-block;top:.1em;fill:#ffffff;" xmlns="http://www.w3.org/2000/svg"> <path d="M326.612 185.391c59.747 59.809 58.927 155.698.36 214.59-.11.12-.24.25-.36.37l-67.2 67.2c-59.27 59.27-155.699 59.262-214.96 0-59.27-59.26-59.27-155.7 0-214.96l37.106-37.106c9.84-9.84 26.786-3.3 27.294 10.606.648 17.722 3.826 35.527 9.69 52.721 1.986 5.822.567 12.262-3.783 16.612l-13.087 13.087c-28.026 28.026-28.905 73.66-1.155 101.96 28.024 28.579 74.086 28.749 102.325.51l67.2-67.19c28.191-28.191 28.073-73.757 0-101.83-3.701-3.694-7.429-6.564-10.341-8.569a16.037 16.037 0 0 1-6.947-12.606c-.396-10.567 3.348-21.456 11.698-29.806l21.054-21.055c5.521-5.521 14.182-6.199 20.584-1.731a152.482 152.482 0 0 1 20.522 17.197zM467.547 44.449c-59.261-59.262-155.69-59.27-214.96 0l-67.2 67.2c-.12.12-.25.25-.36.37-58.566 58.892-59.387 154.781.36 214.59a152.454 152.454 0 0 0 20.521 17.196c6.402 4.468 15.064 3.789 20.584-1.731l21.054-21.055c8.35-8.35 12.094-19.239 11.698-29.806a16.037 16.037 0 0 0-6.947-12.606c-2.912-2.005-6.64-4.875-10.341-8.569-28.073-28.073-28.191-73.639 0-101.83l67.2-67.19c28.239-28.239 74.3-28.069 102.325.51 27.75 28.3 26.872 73.934-1.155 101.96l-13.087 13.087c-4.35 4.35-5.769 10.79-3.783 16.612 5.864 17.194 9.042 34.999 9.69 52.721.509 13.906 17.454 20.446 27.294 10.606l37.106-37.106c59.271-59.259 59.271-155.699.001-214.959z"></path></svg> <a href="https://bit.ly/info550">.white[bit.ly/info550]</a> <br> <svg viewBox="0 0 448 512" style="height:1em;position:relative;display:inline-block;top:.1em;fill:#ffffff;" xmlns="http://www.w3.org/2000/svg"> <path d="M448 360V24c0-13.3-10.7-24-24-24H96C43 0 0 43 0 96v320c0 53 43 96 96 96h328c13.3 0 24-10.7 24-24v-16c0-7.5-3.5-14.3-8.9-18.7-4.2-15.4-4.2-59.3 0-74.7 5.4-4.3 8.9-11.1 8.9-18.6zM128 134c0-3.3 2.7-6 6-6h212c3.3 0 6 2.7 6 6v20c0 3.3-2.7 6-6 6H134c-3.3 0-6-2.7-6-6v-20zm0 64c0-3.3 2.7-6 6-6h212c3.3 0 6 2.7 6 6v20c0 3.3-2.7 6-6 6H134c-3.3 0-6-2.7-6-6v-20zm253.4 250H96c-17.7 0-32-14.3-32-32 0-17.6 14.4-32 32-32h285.4c-1.9 17.1-1.9 46.9 0 64z"></path></svg> <a href="https://benkeser.github.io/info550/readings#reproducible-research">.white[Additional reading]</a>
]

---

<style type="text/css">
.remark-slide-content {
  font-size: 22px
}
</style>

## An email you'll receive

.center[
.monobox[
.left[
David, <br> <br>
After review, we found that some data may have been mislabeled. Could you re-run the analyses and update the numbers in Tables 1-3 and re-create Figure 2? <br> <br>
We would like to submit this paper by the end of the week. <br> <br>
Best wishes, <br>
Every collaborator ever
]
]
]

???

Very commonly in collaborations, the data change. It could be due to errors, more data being added, etc. The goal of this class is to make sure you are not filled with dread when you receive such an email. We are working toward a world where you download the new data, type one command, and voila! No entering numbers into tables by hand. No copy-pasting from R/SAS/STATA to Word. Just one click.

---

## Another email you'll receive

.center[
.monobox[
.left[
David, <br> <br>
The data will be finalized tomorrow and we need to have new tables for the DSMB report in three days. Sorry about that. <br> <br>
Best wishes, <br>
Every collaborator ever
]
]
]

???

To prepare for situations like this, project organization is key. We will discuss strategies for preparing fast-turnaround projects. But turning projects around quickly does not mean working carelessly. By having a set, reproducible workflow in place before data acquisition, we can have the best of both worlds: careful analysis planning and execution AND fast delivery.

---

## Another email you'll receive

.center[
.monobox[
.left[
David, <br> <br>
IT just updated R to the latest version on my computer and your analysis code no longer runs. I get lots of strange error messages about compilers and package versions. What gives? <br> <br>
Best wishes, <br>
Every collaborator ever
]
]
]

???

Collaborative projects with multiple people developing and running code can be challenging to manage. We'll discuss tools that make this easier.

---

## Another email you'll receive

.center[
.monobox[
.left[
David, <br> <br>
I finally managed to debug all the compilation errors, but now when I re-run the code, I get completely different results. I'm starting to panic. Help! <br> <br>
Best wishes, <br>
Every collaborator ever
]
]
]

???

Software version control is another big challenge, particularly as more modern learning techniques (e.g., machine learning) are applied. Small changes in an algorithm, in how random seeds are set, etc. can lead to large changes in downstream analyses. We'll discuss containerization tools that make all of these problems tractable.

---

## Reproducibility

<!-- -->

.center[
.small[
Working terminology (figure produced using the [scifigure](https://cran.r-project.org/web/packages/scifigure/vignettes/Visualizing_Scientific_Replication.html) R package)
]
]

???

There are many definitions of reproducibility, replicability, etc. See the [class readings](https://benkeser.github.io/info550/readings#reproducible-research) for more. We will focus on building analyses that satisfy the reproducibility definition outlined above. Could a different analyst sit down with your code, execute it, and get exactly the same results?

Reproducibility is a *minimal* standard. Just because something is *reproducible* does not necessarily imply that it is *correct*. The code may have bugs. The methods may be poorly behaved.
But reproducibility is likely correlated with correctness -- if you are careful enough to make everything reproducible, you are probably more likely to be careful in designing the analysis in the first place.

We will use replicability to mean that a new experiment using the same methods comes to the same conclusions. Generalizability refers to taking conclusions from your study population and generalizing them to a new population.

---
class: title-slide, center, inverse, middle
background-color: #84754e

*You mostly collaborate with yourself, and me-from-two-months-ago never responds to email.*

<div> - Karen Cranston

---

## Why should I care?

* Careful coding is more likely to produce __correct results__.
* In the long run, you will __save (a lot of) time__.
* Higher __impact__ of your science.
* __Avoid embarrassment__ on a public stage.

???

Doing reproducible science is time-consuming at first, but ultimately saves time. As you practice these tools more and more, they will start to become habits. I now do analyses in a day or so (and at much higher quality) that would have taken me a (frustrating) week seven or eight years ago.

One of my great fears as an academic is being accused of intentionally doing bad science. Leaving a paper trail that anyone else can follow is a bit intimidating -- we all make mistakes. But reproducible work indicates that any mistakes made were likely honest.

---
class: title-slide, center, inverse, middle
background-color: #d9d9d6

*This is not rocket science. There is computer code that evaluates the algorithm. There is data. And when you plug the data into that code, you should be able to get the answers back that you have reported. And to the extent that you can’t do that, there is a problem in one or both of those items.*

<div> - Lisa McShane

---

## Levels of reproducibility

* Are the tables and figures reproducible using the code?
* Are the tables and figures reproducible using the code __with minimal user effort__?
* Does the code __actually do__ what's written in Methods?
* Does the code make clear __why__ certain decisions were made?
    * Why did you exclude this variable?
    * Why did you choose these tuning parameters?
* Can the code be used on other data?
* Can the code be extended to do more?

???

Reproducibility is not black and white. The ideal is not easy to achieve, nor is it necessarily warranted on every project. Understanding what level of reproducibility each project requires is a lifelong process.

---

## Basic principles

* Get data in the "raw-est" form possible
* __Document__ where/when you got the data
* Be as __self-sufficient__ as possible
* __Everything via code__
    * with sufficient documentation!
* __Everything automated__
    * with sufficient documentation!

???

The goal is end-to-end reproducibility. In many situations, *you* will be the person with the most data management experience. Reproducibility can easily be undermined by, e.g., a collaborator who manually extracts data from an online database and copy-pastes it into a `.csv` for you. Solution: Code the download process! Automate data pulls! Document how you're doing it!

---

## Things to avoid

* Opening a file to re-save it as `.csv`
* Opening a data file and editing it manually
* Pasting results into the text of a manuscript
* Copy-paste-edit code into a GUI
* Copy-paste-edit tables
* Copy-paste-adjust figures

???

All of these actions are problematic. Each introduces the possibility of inconsistencies or errors and is not reproducible in the sense that we mean.

---
class: title-slide, center, inverse, middle
background-color: #007dba

*If you do anything "by hand" once, you'll have to do it 100 times.*

<div> - Karl Broman

???

So true.

---

## Tools for reproducible research

* Unix command line
* git and online repositories (e.g., GitHub)
* Markdown, RMarkdown, LaTeX, knitr
* GNU Make
* R packages
* Containers (e.g., Docker)
* `bash`, `R`, `python` (`ruby`, `perl`, `html`)

???
In this class, we'll be learning to operate almost exclusively on the command line. Soon, [this will be you](https://tenor.com/8IZG.gif).

Git will make your life easier by handling version control. No more directories with 200 copies of the same file with different dates!

RMarkdown + knitr are great tools for generating reproducible reports (or course notes...).

GNU Make is for automation and for documenting dependencies between files.

`R` packages are a useful way to wrap up code, documentation, and dependencies on other `R` packages.

Containers make it possible to achieve full reproducibility rather painlessly by wrapping up all needed software (down to the OS!) into a "container".

Knowing some `bash` is necessary to operate at the command line and is generally useful for scripting. `R` is becoming (or already is) a standard tool for analysis. `python` is also becoming quite popular for analysis, but its uses go well beyond that. Other languages are great, but not strictly necessary.

---
class: title-slide, center, inverse, middle
background-color: #487f84

*The most important tool is the mindset, when starting, that the end product will be reproducible.*

<div> - Keith Baggerly

???

If you don't know who Keith Baggerly is, [read this reproducibility horror story](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3474449/).